From “Attention Is All You Need” to AI Agents

In 2017, a groundbreaking paper titled “Attention Is All You Need” introduced the transformer architecture, a neural network design that revolutionized artificial intelligence. By enabling machines to process data sequences (like text) in parallel through self-attention mechanisms, transformers unlocked the era of Large Language Models (LLMs) like GPT-4 and Gemini. These models excel at understanding context, generating human-like text, and reasoning—but they’re just the beginning. Today, AI agents are taking this revolution further, especially in industries like maritime, where precision, safety, and efficiency are non-negotiable.

The evolution: From LLMs to AI agents

1. The LLM revolution

Transformers made LLMs possible by allowing models to dynamically weigh the importance of different words in a sentence. For example, when analyzing a ship’s log, an LLM can highlight critical terms like “engine anomaly” or “weather delay” to prioritize actions. However, LLMs have limitations:

- No memory: Each query is treated as new.

- No real-time data access: Trained on static datasets.

- Hallucinations: Risk of generating incorrect facts.

2. AI agents: Bridging the gap

AI agents are autonomous systems that augment LLMs by integrating tools like web APIs, databases, and mathematical engines. They overcome LLM limitations by:

- Memory retention: Storing past interactions (e.g., tracking a vessel’s maintenance history).

- Real-time data access: Pulling live weather feeds or port congestion updates.

- Specialized tools: Using code interpreters for fuel efficiency calculations or satellite imagery analysis.

What are AI agents?

An AI agent is an autonomous system that perceives its environment, reasons about goals, and acts to achieve objectives using tools like APIs, databases, or physical devices. Unlike standalone LLMs, agents combine:

- Planning: Deciding sequences of actions.

- Memory: Storing and retrieving past experiences.

- Tool Use: Interfacing with external systems (e.g., calculators, APIs).

- Learning: Adapting behavior via feedback (reinforcement learning, fine-tuning).

Core technical components

1. Architecture

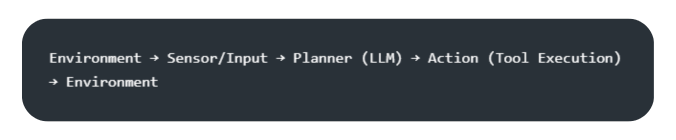

Modern AI agents often follow a neuro-symbolic framework:

- Neural component: LLMs (e.g., GPT-4, Claude) handle language understanding, reasoning, and generating plans.

- Symbolic component: Rule-based systems or code executors validate outputs (e.g., ensuring compliance with maritime regulations).

Example workflow:
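A minimal sketch of such a workflow, assuming a stubbed neural step and a single hand-written rule. The names `propose_plan`, `validate_plan`, and `SPEED_LIMITS_KNOTS` are hypothetical, and a real agent would call an LLM where the stub returns a fixed plan.

```python
from dataclasses import dataclass

# Hypothetical rule table: maximum speed (knots) per regulated zone.
SPEED_LIMITS_KNOTS = {"zone_x": 20.0}


@dataclass
class PlanStep:
    zone: str
    speed_knots: float


def propose_plan(request: str) -> list[PlanStep]:
    """Stand-in for the neural component; a real agent would query an LLM here."""
    return [PlanStep("open_sea", 24.0), PlanStep("zone_x", 23.0)]


def validate_plan(plan: list[PlanStep]) -> list[str]:
    """Symbolic component: return one violation message per rule-breaking step."""
    violations = []
    for step in plan:
        limit = SPEED_LIMITS_KNOTS.get(step.zone)
        if limit is not None and step.speed_knots > limit:
            violations.append(
                f"{step.zone}: {step.speed_knots} kn exceeds limit of {limit} kn"
            )
    return violations


plan = propose_plan("Plan the fastest passage through zone X")
violations = validate_plan(plan)
print(violations)
```

If `violations` is non-empty, the agent could send the messages back to the LLM and ask for a revised plan instead of executing the original one.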

2. Planning & reasoning

Agents use algorithms like:

- Chain-of-Thought (CoT): Break tasks into step-by-step reasoning. E.g., Diagnosing engine failure: “Sensor X is faulty → Check maintenance logs → Notify engineer.”

- Tree-of-Thought (ToT): Explore multiple reasoning paths in parallel.

- ReAct framework: Interleave reasoning and acting. The LLM generates a hypothesis (Reason), calls a tool to verify it (Act), and feeds the observation back into the next reasoning step.
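The reason-then-act loop can be sketched as follows. This is an illustration under stated assumptions, not a production framework: `scripted_llm` is a hypothetical stand-in that replays fixed steps where a real LLM would be called, and the two tools in `TOOLS` return made-up observations.

```python
from typing import Callable

# Hypothetical tool registry; real tools would query sensors or databases.
TOOLS: dict[str, Callable[[str], str]] = {
    "read_sensor": lambda name: "no signal" if name == "sensor_x" else "ok",
    "maintenance_log": lambda name: f"{name} last serviced 14 months ago",
}


def scripted_llm(history: list[str]) -> str:
    """Stand-in for an LLM: picks the next step from the number of observations."""
    observations = sum(1 for line in history if line.startswith("Observation:"))
    script = [
        "Action: read_sensor[sensor_x]",
        "Action: maintenance_log[sensor_x]",
        "Final: Sensor X is faulty and overdue for service; notify engineer.",
    ]
    return script[observations]


def react(question: str, llm: Callable[[list[str]], str], max_steps: int = 5) -> str:
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        step = llm(history)  # Reason: the model proposes the next step
        history.append(step)
        if step.startswith("Final:"):
            return step.removeprefix("Final:").strip()
        # Act: parse "Action: tool[argument]" and run the named tool.
        tool_name, _, rest = step.removeprefix("Action:").strip().partition("[")
        observation = TOOLS[tool_name](rest.rstrip("]"))
        history.append(f"Observation: {observation}")
    return "No answer within step budget"


answer = react("Why is the engine alarm active?", scripted_llm)
print(answer)
```

The `max_steps` budget is a design choice that keeps a confused model from looping on tool calls indefinitely.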

3. Memory systems

- Short-term memory: Context windows (e.g., GPT-4 Turbo’s 128k tokens) store recent interactions.

- Long-term memory (vector databases): Embeddings of past interactions, stored in a vector index such as FAISS or Pinecone, for semantic search.

- Long-term memory (SQL/graph databases): Structured storage of facts (e.g., vessel routes, crew certifications).

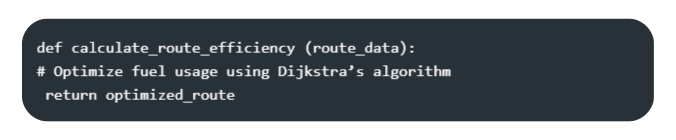
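To illustrate the semantic-search idea behind long-term memory, here is a small sketch that uses only the standard library. It makes two loud simplifications: `embed` is a toy word-count embedding rather than a learned model, and `VectorMemory` does a linear scan rather than using an index such as FAISS or Pinecone. Both names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorMemory:
    """Long-term memory as a list of (embedding, text) pairs with linear-scan search."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = VectorMemory()
memory.add("main engine bearing replaced in Rotterdam")
memory.add("crew certification renewed for chief officer")
memory.add("ballast pump inspected, no faults found")
print(memory.search("when was the engine bearing replaced"))
```

Swapping `embed` for a real embedding model and the list for a vector index would change the quality and speed of retrieval, but not the shape of the interface.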

4. Tool execution

Agents interact with tools via:

- Function calling: LLMs output structured requests (JSON) to trigger pre-defined functions. Example: a registered function `calculate_route_efficiency(route_data)` that optimizes fuel usage using Dijkstra’s algorithm and returns the optimized route.

- APIs: Integrate live data (e.g., weather APIs, AIS ship-tracking).

- Code interpreters: Execute Python/SQL for tasks like data analysis or simulations.
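The function-calling path can be sketched end to end. The waypoint graph in `FUEL_COST`, the shape of `route_data`, and the JSON request format are all assumptions made for illustration. Real LLM APIs each define their own request schema.

```python
import heapq
import json

# Hypothetical fuel cost (tonnes) of each leg between waypoints.
FUEL_COST = {
    "A": {"B": 4.0, "C": 2.0},
    "B": {"D": 5.0},
    "C": {"B": 1.0, "D": 8.0},
    "D": {},
}


def calculate_route_efficiency(route_data: dict) -> dict:
    """Dijkstra's algorithm: find the route with the lowest total fuel cost."""
    start, goal = route_data["start"], route_data["goal"]
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return {"route": path, "fuel": cost}
        if node in visited:
            continue
        visited.add(node)
        for neighbor, leg_cost in FUEL_COST[node].items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + leg_cost, neighbor, path + [neighbor]))
    return {"route": None, "fuel": float("inf")}


# Registry of functions the model is allowed to call.
FUNCTIONS = {"calculate_route_efficiency": calculate_route_efficiency}


def dispatch(llm_output: str) -> dict:
    """Parse the model's JSON request and call the matching registered function."""
    request = json.loads(llm_output)
    function = FUNCTIONS[request["name"]]
    return function(**request["arguments"])


# Example of the structured request an LLM might emit.
llm_output = (
    '{"name": "calculate_route_efficiency", '
    '"arguments": {"route_data": {"start": "A", "goal": "D"}}}'
)
result = dispatch(llm_output)
print(result)
```

Looking the function up in an explicit registry, rather than evaluating whatever name the model produces, limits the model to calls the developer has approved.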

Key algorithms & frameworks

1. Reinforcement Learning (RL)

Agents optimize actions via reward signals (e.g., minimize fuel consumption):

- PPO (Proximal Policy Optimization): Fine-tune LLM policies for maritime-specific tasks.

- Q-Learning: Train agents to choose optimal tools (e.g., “Use weather API before route planning”).
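As a rough illustration of the Q-learning bullet, the sketch below trains a tabular agent on a toy two-state problem in which planning a route before fetching weather data is penalized. The environment in `step`, the reward values, and the hyperparameters are all hypothetical choices for the example.

```python
import random

ACTIONS = ["call_weather_api", "plan_route"]
STATES = ("no_weather", "has_weather")


def step(state: str, action: str) -> tuple[str, float, bool]:
    """Hypothetical toy environment: planning without weather data is penalized."""
    if action == "call_weather_api":
        return "has_weather", -0.1, False  # small cost for each API call
    reward = 1.0 if state == "has_weather" else -1.0
    return "done", reward, True


def train(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
          epsilon: float = 0.2, seed: int = 0) -> dict:
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state, done, steps = "no_weather", False, 0
        while not done and steps < 10:
            if rng.random() < epsilon:  # explore
                action = rng.choice(ACTIONS)
            else:  # exploit current estimates
                action = max(ACTIONS, key=lambda a: q[(state, a)])
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: move toward reward + discounted best next value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state, steps = next_state, steps + 1
    return q


q_table = train()
policy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in STATES}
print(policy)
```

The learned policy calls the weather API first and plans the route second, which mirrors the “Use weather API before route planning” example.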

2. Modular design patterns

- Toolformer: LLMs learn to invoke tools during training (e.g., “Call API X when asked about port delays”).

- HuggingGPT: Orchestrate multiple models (e.g., use Stable Diffusion for hazard visualization + LLM for report generation).

3. Multi-agent systems

- Decentralized Agents: Autonomous sub-agents collaborate (e.g., one agent monitors engine health, another optimizes cargo).

- Auctions/Coordination Protocols: Agents bid for resources (e.g., port slots) using game theory.
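One simple coordination protocol is a sealed-bid second-price auction, in which the highest bidder wins but pays the second-highest bid, so bidding one's true valuation is a dominant strategy. The sketch below is one possible instance of the auction idea above, not necessarily the protocol any particular system uses. The vessel names and bid values are hypothetical.

```python
def second_price_auction(bids: dict[str, float]) -> tuple[str, float]:
    """Return (winner, price): highest bidder wins and pays the second-highest bid."""
    if len(bids) < 2:
        raise ValueError("need at least two bidders")
    ranked = sorted(bids.items(), key=lambda item: item[1], reverse=True)
    winner, _ = ranked[0]
    _, price = ranked[1]
    return winner, price


# Hypothetical valuations: what a port slot is worth to each vessel's agent.
bids = {"vessel_a": 12000.0, "vessel_b": 9500.0, "vessel_c": 15000.0}
winner, price = second_price_auction(bids)
print(winner, price)
```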

Technical challenges

- Hallucination mitigation (guardrails): Validate outputs with rule engines (e.g., “Speed must be ≤20 knots in zone X”).

- Hallucination mitigation (Monte Carlo sampling): Generate multiple responses and pick the majority-voted answer.

- Latency: Reduce token usage and speed up inference (e.g., FlashAttention for faster attention computation in transformers).

- Security: Sandbox tool execution (e.g., Docker containers for code interpreters).
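The guardrail and majority-vote ideas from the list above can be combined in a few lines. This is a sketch under assumptions: the sampled speeds are hypothetical stand-ins for several LLM responses to the same prompt, and `within_speed_limit` encodes only the single example rule quoted above.

```python
from collections import Counter
from typing import Callable, Optional

MAX_SPEED_KNOTS = 20.0  # rule from the text: speed must be <= 20 knots in zone X


def within_speed_limit(speed_knots: float) -> bool:
    return 0.0 < speed_knots <= MAX_SPEED_KNOTS


def majority_vote(samples: list[float],
                  guardrail: Callable[[float], bool]) -> Optional[float]:
    """Drop samples that fail the guardrail, then return the most common survivor."""
    valid = [s for s in samples if guardrail(s)]
    if not valid:
        return None
    return Counter(valid).most_common(1)[0][0]


# Hypothetical speeds proposed by five independent samples from the same prompt.
samples = [18.0, 25.0, 18.0, 19.5, 18.0]
recommended = majority_vote(samples, within_speed_limit)
print(recommended)
```

Returning `None` when every sample fails the rule lets the caller escalate to a human instead of acting on an invalid answer.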

Example: Maritime AI agent pipeline

- Input: “Predict engine failure risk for vessel Y.”

- Perception: Pull real-time sensor data (RPM, temperature) and maintenance logs.

- Reasoning: LLM generates a causal graph linking sensor anomalies to failure modes.

- Action: Call a Prophet time-series model to forecast degradation. Query a knowledge graph of past failures.

- Output: “High risk (87%) of bearing failure in 14 days. Recommend inspection at next port.”
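The forecasting step in this pipeline can be approximated with something much simpler than Prophet. The sketch below swaps in a plain least-squares trend line and extrapolates it to an alarm threshold. The temperature readings and the threshold are hypothetical values chosen for illustration, and the function names are not from any library.

```python
from typing import Optional


def fit_trend(values: list[float]) -> tuple[float, float]:
    """Least-squares line through (day index, value); returns (slope, intercept)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def days_until_threshold(values: list[float], threshold: float) -> Optional[float]:
    """Days after the last reading until the fitted line crosses the threshold."""
    slope, intercept = fit_trend(values)
    if slope <= 0:
        return None  # no upward trend, so no projected crossing
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - (len(values) - 1))


# Hypothetical daily bearing temperatures (deg C) and alarm threshold.
bearing_temps = [70.0, 71.0, 72.0, 73.0, 74.0]
ALARM_THRESHOLD = 88.0

remaining = days_until_threshold(bearing_temps, ALARM_THRESHOLD)
if remaining is not None:
    print(f"Projected to reach {ALARM_THRESHOLD} deg C in {remaining:.0f} days")
```

A linear trend ignores seasonality and noise, which is where a dedicated time-series model would earn its place in the pipeline.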

How AI agents empower maritime operations

At OceanAI, we deploy agents tailored for maritime challenges. Here’s how they work:

Tools Agents Use

- Retrieval-Augmented Generation (RAG): Combines LLMs with maritime databases (e.g., IMO regulations, sensor data) to generate accurate, compliance-focused responses.

- Function calling: Agents execute tasks like rerouting ships based on real-time storm predictions or optimizing cargo loads using stability algorithms.

- Memory-augmented pipelines: Track crew schedules, maintenance cycles, and port regulations across voyages.
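The retrieval step in RAG can be sketched with a keyword-overlap ranker followed by prompt assembly. This is a simplified illustration, not how any specific product is built: the passages in `documents` are hypothetical placeholders, and production systems typically rank by embedding similarity rather than shared words.

```python
def retrieve(query: str, documents: dict[str, str], k: int = 1) -> list[str]:
    """Rank documents by how many query words they share; return the top-k ids."""
    query_words = set(query.lower().split())
    scores = {
        doc_id: len(query_words & set(text.lower().split()))
        for doc_id, text in documents.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:k]


def build_prompt(query: str, documents: dict[str, str], k: int = 1) -> str:
    """Prepend the retrieved passages so the model answers from them."""
    context = "\n".join(
        f"[{doc_id}] {documents[doc_id]}" for doc_id in retrieve(query, documents, k)
    )
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical placeholder passages; a real deployment would index actual documents.
documents = {
    "manual-12": "fuel pump inspection interval is described in section four",
    "policy-03": "crew rest hours must be logged after every watch",
}
prompt = build_prompt("what is the fuel pump inspection interval", documents)
print(prompt)
```

Tagging each passage with its document id lets the generated answer cite its source, which matters for compliance-focused responses.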

Maritime Applications

- Predictive Maintenance: Agents analyze sensor data from engines to predict failures weeks in advance, reducing downtime by 30%.

- Autonomous Navigation: Integrating AI agents with collision-avoidance systems to process radar, AIS, and weather data in real time.

- Document Intelligence: Our agent-driven platform, QDMS AI Search, uses embeddings to retrieve technical manuals or compliance documents in seconds.

The future: Agents as maritime partners

By 2025, the maritime AI market is projected to grow at a 23% CAGR, driven by solutions like ours. At OceanAI, we’re pioneering:

- Crew optimization: IntelliCrew agents match crew members to vessels using historical data and contract terms.

- Emission reduction: Agents optimize routes and speeds, cutting CO2 emissions by up to 47 million tonnes annually.

- Security: AI-driven surveillance systems detect piracy risks using satellite and IoT data.

Why this matters

The synergy of LLMs and AI agents isn’t just about smarter algorithms—it’s about creating resilient, sustainable maritime ecosystems. From reducing fuel costs to safeguarding crews, OceanAI is at the helm of this transformation.

Explore how we’re redefining maritime innovation at OceanAI.